Significance Of Time Complexity

'What is an Algorithm?'

‘Algorithm’ in computer programming, is a finite sequence of well-defined instructions, typically executed in a computer, to solve a class of problems or to perform a common task i.e., there needs to be a sequence of defined instructions that have to be given to the computer to execute an algorithm to perform a specific task. There can be hundreds of different algorithms to perform a single specific task. The result of the variation depends upon the programming language chosen, operating system, processor, hardware, etc. that are used. Now that we know different factors can affect the outcome of an algorithm if being executed, it is wise to understand how efficiently such programs are used to perform a task.

Let us assume we cannot change the computer we are using or the programming language, what should we do then to make our program more efficient. To do this, we are required to evaluate the ‘Time complexity’ of an algorithm.

Now, what is Time Complexity and Significance of Time Complexity?

In Simple terms, Time Complexity is the amount of time taken to execute a program and perform the function of the length of the input. It doesn’t tell about the total execution time of an algorithm. It gives information about the variation (increase or decrease) in execution time when the number of operations (increase or decrease) in an algorithm. Now that we have got some idea of why Time complexity is so significant. We will slowly move to a little more advanced portion but learn in easy language.

Before that, let’s understand by an example-:

Suppose you are searching your copy in a pile of 100 copies arranged numerically. Your roll no. is 55 and you want to get your copy asap.

There are two ways to find your copy:

1. You will search for your copy one by one from 1 till 55.

2. Or you will take a few copies and check the roll no. (say) it’s 30. Now you will compare 30 with 55, obviously it comes before your roll no. so you will proceed further without searching in the first 30 copies and so on. Repeat the process till you find your copy.

You will obviously choose the 2nd option because it is more time relevant. In this way time complexity helps us to choose the correct algorithm for our code to make it work faster.

Many factors can affect the variation of algorithm execution as said earlier from Hardware to software. It is important to understand, how effectively programs are used to perform a task, and to gauge that we require to evaluate both space and time complexity of an algorithm. Touching Space Complexity of an algorithm defines the amount of space or memory taken by an algorithm to run as a function of the length of the input.

Let’s try to understand the time difference in more detailed way by taking some example in different programming languages…

EXAMPLES WITH CODE AND OUTPUT

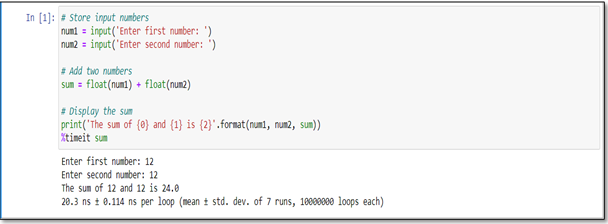

Python-:

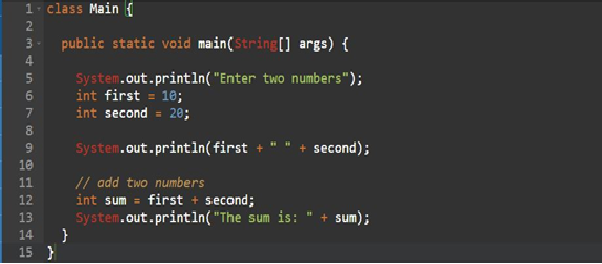

Java-:

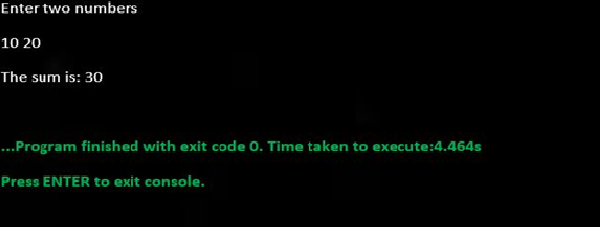

C Code-:

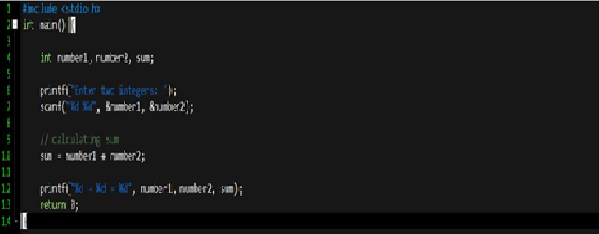

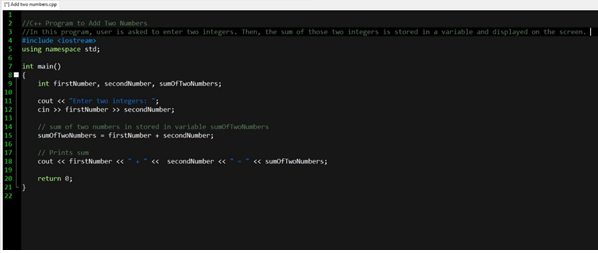

C++ Code-:

Here we have seen the time taken by different programming languages.

So now we have an idea of how time complexity varies for different languages. Based on this now we will get into types of notations used in describing time complexity.

Types of Notation used in time complexity-:

Before getting on to the notation used for time complexity, let us know about the different types of notations used to describe the behaviour of an algorithm. We use different types of notations to describe the running time of an algorithm by identifying its behaviour as the input size for the algorithm, these are called Asymptotic Notations.

The running time of an algorithm falls under three conditions:

1. Best Case: Big-Ω Notation

The notation Ω(n) simply expresses the lower bound of an algorithm's running time. The shortest time taken to complete an algorithm, thus implying best case

2. Worst Case: Big-Ο Notation

The notation Ο(n) simply expresses the upper bound of an algorithm's running time. The longest time to complete an algorithm, thus implying a worst case.

3. Average Case: Big-θ Notation

The notation θ(n) expresses the time taken in between both the lower bound and the upper bound of an algorithm's running time. Thus, implied an average between best and worst case.

These are the notations used to describe the limiting behaviour of an algorithm. Since we described time complexity as the total time that an algorithm takes to completely execute itself, therefore Big-Ο Notation is used to represent time complexity.

Therefore, the time complexity of the above examples will be O(1). Since the operation itself is a constant and the input is fixed.

Evaluation of Time Complexity-:

We have learnt about how the order notation is given to each algorithm between runtime vs input size. Now, it is time to know how to evaluate the Time complexity of an algorithm based on the order notation it get and compute the total time required to run an algorithm.

Let us illustrate how to evaluate the time complexity of an algorithm with an example:

The Binary Search algorithm is defined as:

1. We are given an input array that is supposed to be sorted in ascending order.

2. We take two variables which will act as a pointer i.e., str and end.

3. Str will be assigned with 0 and the end will be assigned to the last index of the array.

4. Now we will introduce another variable mid which will mark the middle of the current array such as (str + end)/2.

5. If the element is present at the mid index and is equal to the element to be searched, then just return the mid index.

6. If the element to be searched is smaller than the element presents at the mid index, make end = mid-1, and all RHS will be discarded.

7. If the element to be searched is greater than the element presents at the mid index, make str = mid+1, and all LHS will be discarded.

To solve this, let's take k to be the number of iterations in which the binary search gets terminated.

In a binary search algorithm, the array taken gets divided by half at every iteration. If n is the length of the array at the first iteration, then at the second iteration, the length of the array will be n/2. Again, dividing by half in the third iteration will make the array’s length n/4 i.e., n/(2^2). Similarly, at the fourth iteration, the value of the array’s length will be n/(2^3) and so on. At the kth iteration, the value of the length of the array will be (n/2^k).

After k iterations, the length of the array becomes 1.

Therefore,

n/(2^k) =1

=> n = 2k

Applying log function on both sides:

=>log(n) = log (2^k) (log with base 2)

=>log (n) = k log (2) (log with base 2)

As (log (a) = 1) (log with base 2)

Therefore,

=> k = log (base 2) (n)

Therefore, the time complexity of the Binary Search algorithm is Ο(log(base2)

Types of Time Complexity used-:

1. Constant Time-: O(1)

It is said to be constant time as it doesn’t depend on input size n.

2. Linear Time-: O(n)

It is said to be linear time complexity if the running time increases linearly with the length of input.

3. Logarithmic Time-: O (log n)

It is said to be Logarithmic time complexity when it reduces the size of the input data in each step.

4. Quadratic Time-: It is said to be Quadratic time complexity where the running time increases non-linearly (n^2) with the length of the input.

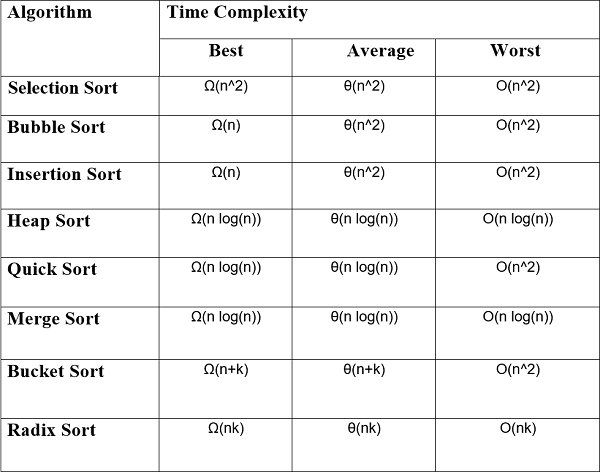

So, we have discussed almost every aspects topic of Time Complexity, now let’s get to the last topic that is

“Sorting Algorithms in time Complexity”

Summary-:

So, here in this article we have learnt “Significance of Time Complexity” from basic to intermediate level. We have discussed What is an Algorithm? What is Time Complexity? Significance of Time Complexity, Different types of Notation used in Time Complexity, Evaluation of Time Complexity and Sorting Algorithm in Time Complexity. We also learnt to assign different notation for algorithm based. Also, we have seen how different algorithms run time is differ from each other.

If you have learnt something new in this blog, do share it and do read our “Significance of Space Complexity”